- Configuration / environment

- Reference sites

- Installing Kubernetes

Configuration / environment

Rough overview of the setup

Reference sites

Installing Kubernetes

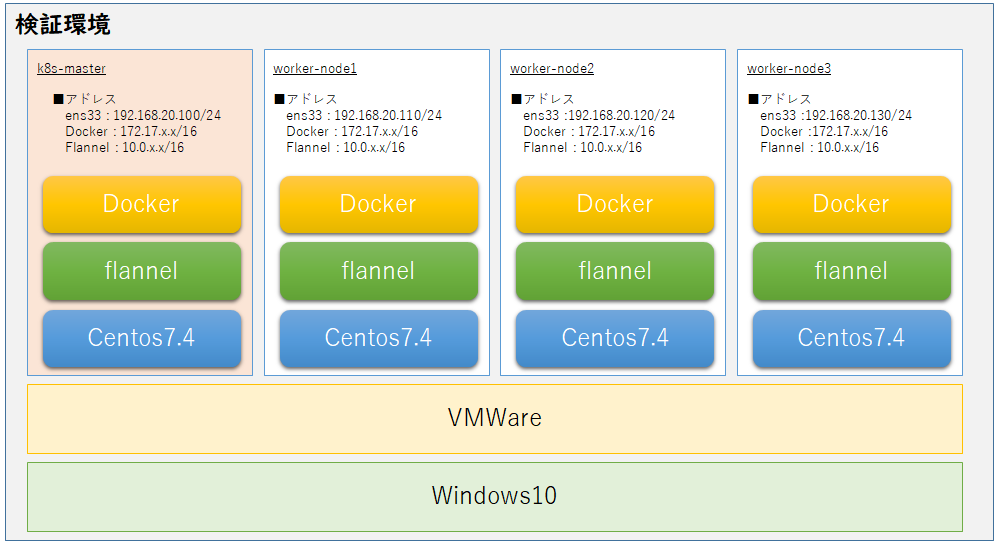

Preparing the virtual machines

Deploy the virtual machines

- k8s-master: 1 VM

- worker-node: 3 VMs (2 also works)

Initial setup of the virtual machines

1. Edit hosts

Note: there are reportedly cases where installation fails when the worker entries are added, but listing them caused no problems this time.

# vi /etc/hosts

■■■■ Add ■■■■

192.168.20.100 k8s-master

192.168.20.110 worker-node1

192.168.20.120 worker-node2

192.168.20.130 worker-node3

■■■■■■■■■■■■■■■■
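If you would rather script this step than edit with vi, here is a minimal sketch that appends each entry only when the hostname is not already present, so it can be re-run safely. `add_host_entry` and `add_cluster_hosts` are hypothetical helper names, not part of any tool; the file path is a parameter so you can try it on a scratch copy before touching /etc/hosts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP HOSTNAME" to FILE unless HOSTNAME already appears in it.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # -w: match the hostname as a whole word, so worker-node1 does not match worker-node10
  if grep -qw -- "$name" "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Hypothetical helper: add the four nodes used in this article (defaults to /etc/hosts).
add_cluster_hosts() {
  local file="${1:-/etc/hosts}"
  add_host_entry "$file" 192.168.20.100 k8s-master
  add_host_entry "$file" 192.168.20.110 worker-node1
  add_host_entry "$file" 192.168.20.120 worker-node2
  add_host_entry "$file" 192.168.20.130 worker-node3
}
```

Run `add_cluster_hosts` as root on every node, or `add_cluster_hosts /tmp/hosts.test` to see the result on a scratch file first.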

2. Stop SELinux enforcement (permissive mode)

# setenforce 0

# vi /etc/selinux/config

■■■■ Edit ■■■■

SELINUX=permissive

■■■■■■■■■■■■■■■■
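`setenforce 0` only changes the running mode; the edit to /etc/selinux/config is what keeps SELinux permissive after a reboot. As a sketch, the same edit can be done non-interactively with GNU sed (assumed, as on CentOS 7). `set_selinux_permissive` and `selinux_config_mode` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=permissive in the given config file (GNU sed -i assumed).
set_selinux_permissive() {
  local file="${1:-/etc/selinux/config}"
  # ^SELINUX= does not match the SELINUXTYPE= line, so the policy type is left alone
  sed -i 's/^SELINUX=.*/SELINUX=permissive/' "$file"
}

# Hypothetical helper: print the mode configured for the next boot.
selinux_config_mode() {
  sed -n 's/^SELINUX=//p' "${1:-/etc/selinux/config}"
}
```

After `setenforce 0 && set_selinux_permissive`, `selinux_config_mode` should print permissive, while `getenforce` shows the current runtime mode.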

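3. Disable the firewall (optional)

It was disabled this time.

If you keep the firewall running, the required ports have to be opened; opening the ones listed under "Check required ports" in Installing kubeadm | Kubernetes should be enough (I think).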

Check the MAC address and product_uuid of each VM

Both values must be unique on every VM.

■UUID

[root@k8s-master ~]# cat /sys/class/dmi/id/product_uuid

564D23E9-85F6-067B-207D-AEF289B2C248

■MAC address

[root@k8s-master ~]# ip link

~ omitted ~

3: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT qlen 1000

link/ether 00:0c:29:b2:c2:48 brd ff:ff:ff:ff:ff:ff

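---- Excerpt from the official site ----

It is very likely that hardware devices will have unique addresses, although some virtual machines may have identical values.

Kubernetes uses these values to uniquely identify the nodes in the cluster.

If these values are not unique to each node, the installation process may fail.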

------------------------------------
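Checking node by node is easy to get wrong once there are four VMs, and cloned VMs in particular can end up with duplicates. A minimal sketch, assuming you have collected one value per node into a single stream, for example over SSH as root (an assumption; the article does not set up SSH). `find_duplicates` is a hypothetical helper that prints any value seen more than once.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read one value per line on stdin (product_uuid or MAC address),
# print every value that occurs more than once, and return 1 if there were any.
find_duplicates() {
  local dups
  # Lowercase first so "564D23E9-..." and "564d23e9-..." count as the same value
  dups=$(tr '[:upper:]' '[:lower:]' | sort | uniq -d)
  if [ -n "$dups" ]; then
    printf '%s\n' "$dups"
    return 1
  fi
  return 0
}
```

For example, `for h in k8s-master worker-node1 worker-node2 worker-node3; do ssh root@$h cat /sys/class/dmi/id/product_uuid; done | find_duplicates` prints nothing and returns 0 when every UUID is unique. For MAC addresses, `cat /sys/class/net/ens33/address` prints just the address (ens33 is the interface name from the output above and may differ on other VMs).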

Installing Docker

Target: install these packages on all machines

Docker must be installed at a version supported by the Kubernetes version you are deploying.

----

On each of your machines, install Docker. Version v1.12 is recommended, but v1.11, v1.13 and 17.03 are known to work as well. Versions 17.06+ might work, but have not yet been tested and verified by the Kubernetes node team.

----

# This time, install "Version: 17.03.2-ce".
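To check an installed Docker against the list quoted above before running kubeadm, here is a small sketch. The version list is copied from that excerpt and agrees with kubeadm 1.9's "Max validated version: 17.03" warning; `docker_version_validated` is a hypothetical helper and compares only the major.minor part, so 17.06+ ("might work") counts as unvalidated.

```shell
#!/usr/bin/env bash
# Versions named in the kubeadm excerpt above (Kubernetes 1.9 era); adjust for other releases.
VALIDATED_DOCKER_VERSIONS="1.11 1.12 1.13 17.03"

# Hypothetical helper: return 0 if VERSION (e.g. "17.03.2-ce") has a validated major.minor.
docker_version_validated() {
  local majmin v
  majmin=$(printf '%s\n' "$1" | cut -d. -f1,2)
  for v in $VALIDATED_DOCKER_VERSIONS; do
    if [ "$majmin" = "$v" ]; then
      return 0
    fi
  done
  return 1
}
```

Usage: `docker_version_validated "$(docker version --format '{{.Server.Version}}')" || echo "unvalidated Docker version"`.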

■Install

# yum install -y yum-utils device-mapper-persistent-data lvm2

# yum-config-manager \
    --add-repo https://download.docker.com/linux/centos/docker-ce.repo

# yum install -y --setopt=obsoletes=0 docker-ce-17.03.2.ce-1.el7.centos

■Start and enable on boot

# systemctl start docker

# systemctl enable docker

■Verify

# docker version

Installing kubeadm, kubelet, and kubectl

Adding the Kubernetes repository

Target: install these packages on all machines

Create the repository file

[root@worker-node1 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

> [kubernetes]

> name=Kubernetes

> baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=1

> repo_gpgcheck=1

> gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

> EOF

[root@worker-node1 ~]#

Installing kubelet, kubeadm, and kubectl

[root@k8s-master ~]# yum install -y kubelet kubeadm kubectl

Loaded plugins: fastestmirror
Loading mirror speeds from cached hostfile
 * base: ftp.tsukuba.wide.ad.jp
 * extras: ftp.tsukuba.wide.ad.jp
 * updates: ftp.tsukuba.wide.ad.jp
Resolving Dependencies
--> Running transaction check
---> Package kubeadm.x86_64 0:1.9.3-0 will be installed
--> Processing Dependency: kubernetes-cni for package: kubeadm-1.9.3-0.x86_64
---> Package kubectl.x86_64 0:1.9.3-0 will be installed
---> Package kubelet.x86_64 0:1.9.3-0 will be installed
--> Processing Dependency: socat for package: kubelet-1.9.3-0.x86_64
--> Running transaction check
---> Package kubernetes-cni.x86_64 0:0.6.0-0 will be installed
---> Package socat.x86_64 0:1.7.3.2-2.el7 will be installed
--> Finished Dependency Resolution

Dependencies Resolved

=============================================================
 Package           Arch      Version          Repository     Size
=============================================================
Installing:
 kubeadm           x86_64    1.9.3-0          kubernetes     16 M
 kubectl           x86_64    1.9.3-0          kubernetes     8.9 M
 kubelet           x86_64    1.9.3-0          kubernetes     17 M
Installing for dependencies:
 kubernetes-cni    x86_64    0.6.0-0          kubernetes     8.6 M
 socat             x86_64    1.7.3.2-2.el7    base           290 k

~ omitted ~

Installed:
  kubeadm.x86_64 0:1.9.3-0    kubectl.x86_64 0:1.9.3-0
  kubelet.x86_64 0:1.9.3-0

Dependency Installed:
  kubernetes-cni.x86_64 0:0.6.0-0
  socat.x86_64 0:1.7.3.2-2.el7

Complete!

[root@k8s-master ~]#

Starting kubelet

Target: all machines

[root@worker-node1 ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /etc/systemd/system/kubelet.service.

[root@worker-node1 ~]# systemctl start kubelet

[root@worker-node1 ~]#

# Bridge netfilter settings (make bridged traffic pass through iptables/ip6tables)

[root@worker-node3 ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> EOF

[root@worker-node3 ~]# sysctl --system

~ omitted ~

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

* Applying /etc/sysctl.conf ...

[root@k8s-master ~]#
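`sysctl --system` prints the values it applied, but the effective values can be read back from /proc/sys at any time. A sketch of that check follows; `check_bridge_nf` is a hypothetical helper, and its directory argument exists only so it can be tested against fake files. If the net/bridge entries are missing altogether, the br_netfilter kernel module is probably not loaded (`modprobe br_netfilter`); whether it has to be loaded explicitly depends on the kernel.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify net.bridge.bridge-nf-call-iptables/ip6tables are both 1.
# ROOT defaults to /proc/sys; returns 1 and reports on stderr if either is missing or not 1.
check_bridge_nf() {
  local root="${1:-/proc/sys}" key val rc=0
  for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
    if [ ! -r "$root/net/bridge/$key" ]; then
      echo "missing: net.bridge.$key (is br_netfilter loaded?)" >&2
      rc=1
      continue
    fi
    val=$(cat "$root/net/bridge/$key")
    if [ "$val" != "1" ]; then
      echo "net.bridge.$key = $val (expected 1)" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Run `check_bridge_nf && echo OK` on each node after `sysctl --system`.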

Checking and fixing the cgroup driver used by kubelet

Target: master node (k8s-master)

If Docker's cgroup driver and the kubelet setting do not match, change the kubelet setting to match Docker's cgroup driver.

[root@k8s-master ~]# docker info | grep -i cgroup

Cgroup Driver: cgroupfs

[root@k8s-master ~]# cat /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"

Environment="KUBELET_SYSTEM_PODS_ARGS=--pod-manifest-path=/etc/kubernetes/manifests --allow-privileged=true"

Environment="KUBELET_NETWORK_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"

Environment="KUBELET_DNS_ARGS=--cluster-dns=10.96.0.10 --cluster-domain=cluster.local"

Environment="KUBELET_AUTHZ_ARGS=--authorization-mode=Webhook --client-ca-file=/etc/kubernetes/pki/ca.crt"

Environment="KUBELET_CADVISOR_ARGS=--cadvisor-port=0"

Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=systemd"

Environment="KUBELET_CERTIFICATE_ARGS=--rotate-certificates=true --cert-dir=/var/lib/kubelet/pki"

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_SYSTEM_PODS_ARGS $KUBELET_NETWORK_ARGS $KUBELET_DNS_ARGS $KUBELET_AUTHZ_ARGS $KUBELET_CADVISOR_ARGS $KUBELET_CGROUP_ARGS $KUBELET_CERTIFICATE_ARGS $KUBELET_EXTRA_ARGS

[root@k8s-master ~]#

# The drivers differ, so fix the kubelet setting

■Fix the setting

[root@k8s-master ~]# sed -i "s/cgroup-driver=systemd/cgroup-driver=cgroupfs/g" /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

■Verify

[root@k8s-master ~]# cat /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"

Environment="KUBELET_SYSTEM_PODS_ARGS=--pod-manifest-path=/etc/kubernetes/manifests --allow-privileged=true"

Environment="KUBELET_NETWORK_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"

Environment="KUBELET_DNS_ARGS=--cluster-dns=10.96.0.10 --cluster-domain=cluster.local"

Environment="KUBELET_AUTHZ_ARGS=--authorization-mode=Webhook --client-ca-file=/etc/kubernetes/pki/ca.crt"

Environment="KUBELET_CADVISOR_ARGS=--cadvisor-port=0"

Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"

Environment="KUBELET_CERTIFICATE_ARGS=--rotate-certificates=true --cert-dir=/var/lib/kubelet/pki"

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_SYSTEM_PODS_ARGS $KUBELET_NETWORK_ARGS $KUBELET_DNS_ARGS $KUBELET_AUTHZ_ARGS $KUBELET_CADVISOR_ARGS $KUBELET_CGROUP_ARGS $KUBELET_CERTIFICATE_ARGS $KUBELET_EXTRA_ARGS

[root@k8s-master ~]#

■Reload systemd and restart kubelet

[root@k8s-master ~]# systemctl daemon-reload

[root@k8s-master ~]# systemctl restart kubelet

[root@k8s-master ~]#
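The comparison and the sed edit above can be combined so that the drop-in file is only touched when the drivers actually differ. A sketch with hypothetical helper names: it reads kubelet's driver from the `--cgroup-driver=` flag in the file shown above and takes Docker's driver as an argument (parsed from `docker info`; `docker info --format '{{.CgroupDriver}}'` is another way to get it).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the cgroup driver from "docker info" output read on stdin.
docker_cgroup_driver() {
  awk -F': *' '/Cgroup Driver/ { print $2; exit }'
}

# Hypothetical helper: print the value of --cgroup-driver= found in a kubelet drop-in file.
kubelet_cgroup_driver() {
  sed -n 's/.*--cgroup-driver=\([a-z]*\).*/\1/p' "$1" | head -n 1
}

# Hypothetical helper: rewrite --cgroup-driver in FILE to DRIVER only if it differs.
# Returns 1 without editing anything if DRIVER is empty (e.g. docker info failed).
sync_kubelet_cgroup_driver() {
  local file="$1" driver="$2"
  [ -n "$driver" ] || return 1
  if [ "$(kubelet_cgroup_driver "$file")" = "$driver" ]; then
    return 0
  fi
  sed -i "s/--cgroup-driver=[a-z]*/--cgroup-driver=$driver/" "$file"
}
```

Usage: `sync_kubelet_cgroup_driver /etc/systemd/system/kubelet.service.d/10-kubeadm.conf "$(docker info 2>/dev/null | docker_cgroup_driver)" && systemctl daemon-reload && systemctl restart kubelet`.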

Disabling swap

Target: all machines

■Disable swap

[root@k8s-master ~]# swapoff -a

[root@k8s-master ~]# cat /proc/swaps

Filename Type Size Used Priority

[root@k8s-master ~]#

■Edit the configuration file

[root@k8s-master ~]# vi /etc/fstab

■■■ Edit ■■■

・Comment out the following line

/dev/mapper/centos-swap swap swap defaults 0 0

↓↓↓↓


#/dev/mapper/centos-swap swap swap defaults 0 0

■■■■■■■■■■■■■■

# With only "swapoff -a", swap apparently becomes active again after k8s-master is rebooted:

[root@k8s-master ~]# cat /proc/swaps

Filename Type Size Used Priority

[root@k8s-master ~]# reboot

Last login: Sun Mar 11 10:58:12 2018 from 192.168.20.3

[root@k8s-master ~]# cat /proc/swaps

Filename Type Size Used Priority

/dev/dm-1 partition 4063228 0 -1

[root@k8s-master ~]#
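As the transcript shows, swap comes back after a reboot until the swap line in /etc/fstab is commented out. A sketch that makes the same edit non-interactively and keeps a backup; `disable_swap_in_fstab` and `active_swap_count` are hypothetical helpers. It comments out every uncommented entry whose third field (filesystem type) is `swap`, which covers the `/dev/mapper/centos-swap` line above but is an assumption for other disk layouts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in FILE (default /etc/fstab).
# The original is kept as FILE.bak.
disable_swap_in_fstab() {
  local file="${1:-/etc/fstab}"
  cp -p "$file" "$file.bak" || return 1
  # Leave comment lines alone; prefix "#" to lines whose 3rd field is "swap"
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$file.bak" > "$file"
}

# Hypothetical helper: count active swap areas (lines after the header in /proc/swaps).
active_swap_count() {
  awk 'NR > 1 { n++ } END { print n + 0 }' "${1:-/proc/swaps}"
}
```

After `swapoff -a && disable_swap_in_fstab`, `active_swap_count` should print 0, and keep printing 0 after a reboot.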

Creating a cluster with kubeadm

Initializing the master

Target: master node (k8s-master)

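The --pod-network-cidr option is specified so that flannel, installed later, can be added.

The host must be able to resolve and reach the external "https://dl.k8s.io"; without connectivity, init apparently fails with an error like this: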

unable to get URL "https://dl.k8s.io/release/stable-1.10.txt": Get https://dl.k8s.io/release/stable-1.10.txt: dial tcp: lookup dl.k8s.io on 1.1.1.1:53: read udp 10.10.0.201:59476->1.1.1.1:53: i/o timeout

Option: --apiserver-advertise-address="<master node address>"

[root@k8s-master ~]# kubeadm init --apiserver-advertise-address=192.168.20.100 --pod-network-cidr=10.0.0.0/16

[init] Using Kubernetes version: v1.9.3

[init] Using Authorization modes: [Node RBAC]

[preflight] Running pre-flight checks.

[WARNING FileExisting-crictl]: crictl not found in system path

[preflight] Starting the kubelet service

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.20.100]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated sa key and public key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "scheduler.conf"

[controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"

[init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests".

[init] This might take a minute or longer if the control plane images have to be pulled.

[apiclient] All control plane components are healthy after 27.002085 seconds

[uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[markmaster] Will mark node k8s-master as master by adding a label and a taint

[markmaster] Master k8s-master tainted and labelled with key/value: node-role.kubernetes.io/master=""

[bootstraptoken] Using token: 856d03.01bac1b8753af2ad

[bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: kube-dns

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join --token b7f9ac.00c9f9fd1e050971 192.168.20.100:6443 --discovery-token-ca-cert-hash sha256:9980353432aca250e481bd0dc9750479c3b4bc2193db7fdeb0499821b3217987

[root@k8s-master ~]#

Copy the following commands from the output and keep them for later:

・The commands that let a user access the cluster with kubectl

・The command that joins a worker-node to the Kubernetes cluster
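Since the join command is printed only once, it helps to save the init output to a file, for example by piping `kubeadm init ...` through `tee kubeadm-init.log` (the file name is arbitrary). The sketch below pulls the join line back out; `extract_join_command` is a hypothetical helper and assumes the single-line `kubeadm join ...` form printed by this version. Per the `--token-ttl` help text further down, the bootstrap token expires after 24h by default, so a saved command eventually stops working.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first "kubeadm join ..." line from kubeadm init output
# on stdin, with leading whitespace removed.
extract_join_command() {
  grep -E '^[[:space:]]*kubeadm join ' | sed 's/^[[:space:]]*//' | head -n 1
}
```

Usage: `extract_join_command < kubeadm-init.log`.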

■kubeadm options

Usage:

kubeadm [command]

Available Commands:

alpha Experimental sub-commands not yet fully functional.

completion Output shell completion code for the specified shell (bash or zsh).

config Manage configuration for a kubeadm cluster persisted in a ConfigMap in the cluster.

init Run this command in order to set up the Kubernetes master.

join Run this on any machine you wish to join an existing cluster

reset Run this to revert any changes made to this host by 'kubeadm init' or 'kubeadm join'.

token Manage bootstrap tokens.

upgrade Upgrade your cluster smoothly to a newer version with this command.

version Print the version of kubeadm

Use "kubeadm [command] --help" for more information about a command.

■kubeadm init options

[root@k8s-master ~]# kubeadm init --help

Run this command in order to set up the Kubernetes master.

Usage:

kubeadm init [flags]

Flags:

--apiserver-advertise-address string The IP address the API Server will advertise it's listening on. Specify '0.0.0.0' to use the address of the default network interface.

--apiserver-bind-port int32 Port for the API Server to bind to. (default 6443)

--apiserver-cert-extra-sans stringSlice Optional extra Subject Alternative Names (SANs) to use for the API Server serving certificate. Can be both IP addresses and DNS names.

--cert-dir string The path where to save and store the certificates. (default "/etc/kubernetes/pki")

--config string Path to kubeadm config file. WARNING: Usage of a configuration file is experimental.

--cri-socket string Specify the CRI socket to connect to. (default "/var/run/dockershim.sock")

--dry-run Don't apply any changes; just output what would be done.

--feature-gates string A set of key=value pairs that describe feature gates for various features. Options are:

CoreDNS=true|false (ALPHA - default=false)

DynamicKubeletConfig=true|false (ALPHA - default=false)

SelfHosting=true|false (ALPHA - default=false)

StoreCertsInSecrets=true|false (ALPHA - default=false)

--ignore-preflight-errors stringSlice A list of checks whose errors will be shown as warnings. Example: 'IsPrivilegedUser,Swap'. Value 'all' ignores errors from all checks.

--kubernetes-version string Choose a specific Kubernetes version for the control plane. (default "stable-1.9")

--node-name string Specify the node name.

--pod-network-cidr string Specify range of IP addresses for the pod network. If set, the control plane will automatically allocate CIDRs for every node.

--service-cidr string Use alternative range of IP address for service VIPs. (default "10.96.0.0/12")

--service-dns-domain string Use alternative domain for services, e.g. "myorg.internal". (default "cluster.local")

--skip-token-print Skip printing of the default bootstrap token generated by 'kubeadm init'.

--token string The token to use for establishing bidirectional trust between nodes and masters.

--token-ttl duration The duration before the bootstrap token is automatically deleted. If set to '0', the token will never expire. (default 24h0m0s)

[root@k8s-master ~]#

Cluster access settings and status check

Run the commands displayed in the previous step.

■Access settings

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master ~]#

■Check cluster status

[root@k8s-master ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health": "true"}

[root@k8s-master ~]#

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady master 2m v1.9.3

[root@k8s-master ~]#

■Check the config

[root@k8s-master ~]# kubectl config view

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: REDACTED
    server: https://192.168.20.100:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
current-context: kubernetes-admin@kubernetes
kind: Config
preferences: {}
users:
- name: kubernetes-admin
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED

[root@k8s-master ~]#

Installing Flannel (overlay network)

Target: master node (k8s-master)

Download kube-flannel.yml

[root@k8s-master ~]# curl -L -O https://raw.githubusercontent.com/coreos/flannel/v0.9.1/Documentation/kube-flannel.yml

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 2801 100 2801 0 0 7913 0 --:--:-- --:--:-- --:--:-- 7912

[root@k8s-master ~]# ls -l kube-flannel.yml

-rw-r--r--. 1 root root 2801 Mar 11 12:46 kube-flannel.yml

[root@k8s-master ~]#

Download kube-flannel-rbac.yml

[root@k8s-master ~]# curl -L -O https://raw.githubusercontent.com/coreos/flannel/master/Documentation/k8s-manifests/kube-flannel-rbac.yml

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 857 100 857 0 0 3401 0 --:--:-- --:--:-- --:--:-- 3414

[root@k8s-master ~]#

[root@k8s-master ~]# ls -l kube-flannel-rbac.yml

-rw-r--r--. 1 root root 857 Mar 11 13:05 kube-flannel-rbac.yml

[root@k8s-master ~]#

Edit kube-flannel.yml so that its Network matches the --pod-network-cidr value

[root@k8s-master ~]# vi kube-flannel.yml

■■■ Edit ■■■

  net-conf.json: |
    {
      "Network": "10.244.0.0/16",   --->   "Network": "10.0.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

■■■■■■■■■■■■■■
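The same edit as a sketch with GNU sed, so the flannel Network value is set from exactly the CIDR passed to `kubeadm init --pod-network-cidr` (10.0.0.0/16 here); keeping the two identical is the point of this step. `set_flannel_network` and `flannel_network` are hypothetical helpers and assume the `"Network": "..."` line format of the v0.9.1 manifest.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the "Network" value in kube-flannel.yml with CIDR (GNU sed -i).
set_flannel_network() {
  local file="$1" cidr="$2"
  # "#" is used as the sed delimiter because the CIDR contains "/"
  sed -i -E "s#(\"Network\":[[:space:]]*\")[^\"]*\"#\1${cidr}\"#" "$file"
}

# Hypothetical helper: print the "Network" value currently in the file.
flannel_network() {
  sed -n -E 's#.*"Network":[[:space:]]*"([^"]*)".*#\1#p' "$1"
}
```

Usage: `set_flannel_network kube-flannel.yml 10.0.0.0/16 && flannel_network kube-flannel.yml`.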

Installing Flannel

[root@k8s-master ~]# kubectl apply -f ./kube-flannel-rbac.yml

clusterrole "flannel" created

clusterrolebinding "flannel" created

[root@k8s-master ~]#

[root@k8s-master ~]# kubectl apply -f ./kube-flannel.yml

clusterrole "flannel" configured

clusterrolebinding "flannel" configured

serviceaccount "flannel" created

configmap "kube-flannel-cfg" created

daemonset "kube-flannel-ds" created

[root@k8s-master ~]#

This completes the Flannel installation.

Verify startup

[root@k8s-master ~]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system etcd-k8s-master 1/1 Running 0 2m

kube-system kube-apiserver-k8s-master 1/1 Running 0 2m

kube-system kube-controller-manager-k8s-master 1/1 Running 0 2m

kube-system kube-dns-6f4fd4bdf-hs2hl 3/3 Running 0 1h

kube-system kube-flannel-ds-dz2fn 1/1 Running 0 2m

kube-system kube-proxy-m4n2k 1/1 Running 0 1h

kube-system kube-scheduler-k8s-master 1/1 Running 0 2m

[root@k8s-master ~]#

If the kube-dns and flannel Pods are up and their STATUS is "Running", everything seems to be fine.
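Instead of eyeballing the table, a small filter can list anything that is not yet Running. `pods_not_running` is a hypothetical helper that reads the output of `kubectl get pod --all-namespaces` (where the 4th column is STATUS) and returns non-zero while any Pod is in another state.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "kubectl get pod --all-namespaces" output on stdin,
# print NAMESPACE/NAME for every Pod whose STATUS (4th column) is not Running,
# and exit 1 if there was at least one.
pods_not_running() {
  awk 'NR > 1 && $4 != "Running" { print $1 "/" $2; bad = 1 }
       END { exit (bad ? 1 : 0) }'
}
```

Usage: `kubectl get pod --all-namespaces | pods_not_running && echo "all Pods Running"`.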

Workload scheduling setting (removing the master taint lets ordinary Pods be scheduled on k8s-master as well)

[root@k8s-master ~]# kubectl taint nodes --all node-role.kubernetes.io/master-

node "k8s-master" untainted

[root@k8s-master ~]#

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 2m v1.9.3

[root@k8s-master ~]#

Adding nodes

Target: worker nodes (worker-node1, worker-node2, worker-node3)

Use the kubeadm join command that was displayed when kubeadm init was run.

#worker-node1

[root@worker-node1 ~]# kubeadm join --token b7f9ac.00c9f9fd1e050971 192.168.20.100:6443 --discovery-token-ca-cert-hash sha256:9980353432aca250e481bd0dc9750479c3b4bc2193db7fdeb0499821b3217987

[preflight] Running pre-flight checks.

[WARNING FileExisting-crictl]: crictl not found in system path

[discovery] Trying to connect to API Server "192.168.20.100:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://192.168.20.100:6443"

[discovery] Requesting info from "https://192.168.20.100:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "192.168.20.100:6443"

[discovery] Successfully established connection with API Server "192.168.20.100:6443"

This node has joined the cluster:

* Certificate signing request was sent to master and a response

was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

[root@worker-node1 ~]#

#Check on k8s-master

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 1h v1.9.3

worker-node1 Ready <none> 58s v1.9.3 ← registered

[root@k8s-master ~]#

#Check after registering the remaining two worker-nodes

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 1h v1.9.3

worker-node1 Ready <none> 4m v1.9.3 ← registered

worker-node2 Ready <none> 3m v1.9.3 ← registered

worker-node3 Ready <none> 3m v1.9.3 ← registered

[root@k8s-master ~]#

All three worker nodes were registered successfully.
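Nodes can take a little while to turn Ready after joining. A polling sketch: `count_ready_nodes` and `wait_for_ready_nodes` are hypothetical helpers, the 5-second interval and 300-second default timeout are arbitrary choices, and only a STATUS of exactly `Ready` is counted (so something like `Ready,SchedulingDisabled` is treated as not ready).

```shell
#!/usr/bin/env bash
# Hypothetical helper: count rows of "kubectl get nodes" output (stdin) whose STATUS is Ready.
count_ready_nodes() {
  awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Hypothetical helper: poll until at least EXPECTED nodes are Ready or TIMEOUT seconds pass.
wait_for_ready_nodes() {
  local expected="$1" timeout="${2:-300}" waited=0 ready
  while :; do
    ready=$(kubectl get nodes 2>/dev/null | count_ready_nodes)
    if [ "$ready" -ge "$expected" ]; then
      return 0
    fi
    if [ "$waited" -ge "$timeout" ]; then
      echo "only $ready of $expected nodes Ready after ${timeout}s" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
}
```

For this cluster, `wait_for_ready_nodes 4` waits for k8s-master plus the three workers.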

Creating a Pod

Target: k8s-master

Create the YAML

Create a YAML file that runs nginx

[root@k8s-master work]# vi nginx-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
spec:
  containers:
  - name: nginx-container
    image: nginx
    ports:
    - containerPort: 80

Create the Pod

■Create

[root@k8s-master work]# kubectl create -f nginx-pod.yaml

pod "nginx-pod" created

[root@k8s-master work]#

■Verify

[root@k8s-master work]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-pod 0/1 ContainerCreating 0 4s <none> worker-node1

[root@k8s-master work]#

[root@k8s-master work]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-pod 0/1 ContainerCreating 0 6s <none> worker-node1

[root@k8s-master work]#

[root@k8s-master work]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-pod 1/1 Running 0 8s 10.0.3.3 worker-node1

[root@k8s-master work]#
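The repeated `kubectl get pods -o wide` above can be replaced with a polling loop on the Pod's `status.phase`, read with kubectl's jsonpath output. A sketch with hypothetical helper names and arbitrary defaults (120-second timeout, 2-second interval). Note that the phase `Running` means the Pod is bound to a node and its containers have been created, which is not quite the same as READY 1/1.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the phase (Pending, Running, Succeeded, Failed, Unknown) of Pod NAME.
get_pod_phase() {
  kubectl get pod "$1" -o jsonpath='{.status.phase}'
}

# Hypothetical helper: poll until Pod NAME is Running, giving up after TIMEOUT seconds.
wait_for_pod_running() {
  local name="$1" timeout="${2:-120}" interval="${3:-2}" waited=0 phase=""
  # Guard against a zero interval, which would otherwise loop forever
  [ "$interval" -gt 0 ] || interval=1
  while [ "$waited" -le "$timeout" ]; do
    phase=$(get_pod_phase "$name" 2>/dev/null)
    if [ "$phase" = "Running" ]; then
      return 0
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "pod $name not Running after ${timeout}s (last phase: ${phase:-unknown})" >&2
  return 1
}
```

Usage: `kubectl create -f nginx-pod.yaml && wait_for_pod_running nginx-pod`.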

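★Errors when running kubeadm init

■When run with an unsupported Docker version

In this environment (Kubernetes v1.9.3), Docker is apparently validated only up to 17.03, so if you install the latest version you end up reinstalling Docker. (In the output below, the Docker version check is only a WARNING; the fatal error in this run is the Swap check, covered next.)

Fix: install the specified Docker version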

[root@k8s-master ~]# kubeadm init

[init] Using Kubernetes version: v1.9.3

[init] Using Authorization modes: [Node RBAC]

[preflight] Running pre-flight checks.

[WARNING SystemVerification]: docker version is greater than the most recently validated version. Docker version: 17.12.1-ce. Max validated version: 17.03

[WARNING FileExisting-crictl]: crictl not found in system path

[preflight] Some fatal errors occurred:

[ERROR Swap]: running with swap on is not supported. Please disable swap

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

[root@k8s-master ~]#

----

■Error when swap is enabled

Fix: swapoff -a (and comment out the swap entry in /etc/fstab so swap stays off after a reboot)

[root@k8s-master ~]# kubeadm init

[init] Using Kubernetes version: v1.9.3

[init] Using Authorization modes: [Node RBAC]

[preflight] Running pre-flight checks.

[WARNING FileExisting-crictl]: crictl not found in system path

[preflight] Some fatal errors occurred:

[ERROR Swap]: running with swap on is not supported. Please disable swap

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

[root@k8s-master ~]#

----

■Error when running kubeadm init while a cluster set up by kubeadm is already running

Fix: keep using the running cluster, or tear it down with kubeadm reset and initialize it again.

[root@k8s-master ~]# kubeadm init --apiserver-advertise-address=192.168.20.100 --pod-network-cidr=10.0.0.0/16

[init] Using Kubernetes version: v1.9.4

[init] Using Authorization modes: [Node RBAC]

[preflight] Running pre-flight checks.

[WARNING FileExisting-crictl]: crictl not found in system path

[preflight] Some fatal errors occurred:

[ERROR Port-6443]: Port 6443 is in use

[ERROR Port-10250]: Port 10250 is in use

[ERROR Port-10251]: Port 10251 is in use

[ERROR Port-10252]: Port 10252 is in use

[ERROR FileAvailable--etc-kubernetes-manifests-kube-apiserver.yaml]: /etc/kubernetes/manifests/kube-apiserver.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-controller-manager.yaml]: /etc/kubernetes/manifests/kube-controller-manager.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-scheduler.yaml]: /etc/kubernetes/manifests/kube-scheduler.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-etcd.yaml]: /etc/kubernetes/manifests/etcd.yaml already exists

[ERROR DirAvailable--var-lib-etcd]: /var/lib/etcd is not empty

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

[root@k8s-master ~]#
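Before re-running `kubeadm init`, you can check whether a previous control plane is still listening on the ports from these errors. A sketch that parses the output of `ss -ltn` (from iproute, available on CentOS 7); `ports_in_use` is a hypothetical helper, and the port list is simply the four ports in the error output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "ss -ltn" output on stdin and print which of the
# kubeadm ports from the errors above (6443, 10250, 10251, 10252) are listening.
ports_in_use() {
  awk -v ports="6443 10250 10251 10252" '
    BEGIN { n = split(ports, p, " "); for (i = 1; i <= n; i++) want[p[i]] = 1 }
    NR > 1 {
      port = $4
      sub(/.*:/, "", port)   # keep what follows the last ":" (IPv4 and IPv6 forms)
      if ((port in want) && !(port in seen)) { seen[port] = 1; print port }
    }'
}
```

If `ss -ltn | ports_in_use` prints anything, either keep using the running cluster or run `kubeadm reset` first.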

■"x509: certificate signed by unknown authority" error

Fix (probably): set up kubectl access again

[root@k8s-master ~]# kubectl get pods

Unable to connect to the server: x509: certificate signed by unknown authority (possibly because of "crypto/rsa: verification error" while trying to verify candidate authority certificate "kubernetes")

[root@k8s-master ~]#

■Redo the access settings (cp -i asks before overwriting the existing config; answer y)

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

■Check nodes

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady master 11m v1.9.3

worker-node1 NotReady <none> 7m v1.9.3

worker-node2 NotReady <none> 6m v1.9.3

worker-node3 NotReady <none> 6m v1.9.3

[root@k8s-master ~]#
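This error most likely appears when $HOME/.kube/config still holds the admin.conf of an earlier cluster (for example after `kubeadm reset` and a new `kubeadm init`), because each new init generates a new CA. A sketch to check for that; `kubeconfig_ca_matches` is a hypothetical helper, and it assumes the kubeconfig's `certificate-authority-data` is the base64 encoding of /etc/kubernetes/pki/ca.crt byte for byte, which is my understanding of what kubeadm writes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: return 0 if the CA embedded in KUBECONFIG equals CA_FILE,
# non-zero otherwise (2 if no certificate-authority-data line is found).
kubeconfig_ca_matches() {
  local kubeconfig="${1:-$HOME/.kube/config}" ca="${2:-/etc/kubernetes/pki/ca.crt}" data
  data=$(awk '/certificate-authority-data:/ { print $2; exit }' "$kubeconfig")
  [ -n "$data" ] || return 2
  # Decode the embedded CA and compare it byte for byte with the CA file
  printf '%s' "$data" | base64 -d | cmp -s - "$ca"
}
```

Usage: `kubeconfig_ca_matches || echo "kubeconfig CA is stale; copy admin.conf again"`.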

----

[Default parameters] Flannel configuration file

[root@k8s-master ~]# curl https://raw.githubusercontent.com/coreos/flannel/v0.9.1/Documentation/kube-flannel.yml

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "type": "flannel",
      "delegate": {
        "isDefaultGateway": true
      }
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: amd64
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.9.1-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conf
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.9.1-amd64
        command: [ "/opt/bin/flanneld", "--ip-masq", "--kube-subnet-mgr" ]
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

[root@k8s-master ~]#