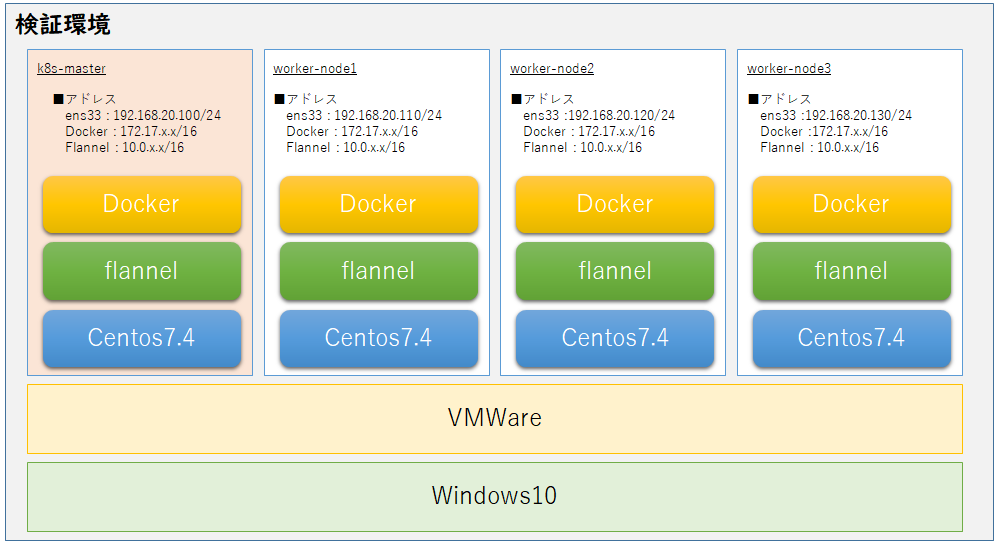

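Configuration / environment

Rough diagram of the setup

Procedure

For now I am not sure whether re-registering a node without first deleting it on the master is the correct approach.

(Some people delete the node information on the master and then register the node as a new one; others do not delete the node information but restart the process after registering the node.)

Method 1: Re-run the same command that was used when the node was last added

Condition: the token is still usable

Method 2: Create a new token, then register the node

Condition: the token has expired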
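The choice between the two methods comes down to whether the token from the earlier join command is still listed. Below is a minimal sketch of that check, assuming the output of `kubeadm token list` is piped in on stdin; `token_is_listed` and `choose_method` are hypothetical helper names, not part of kubeadm.

```shell
# Succeeds when the given token appears in the TOKEN column of
# `kubeadm token list` output read from stdin (hypothetical helper).
token_is_listed() {
  awk -v t="$1" 'NR > 1 && $1 == t { found = 1 } END { exit !found }'
}

# Prints which of the two methods applies for the given token.
choose_method() {
  if token_is_listed "$1"; then
    echo "method 1"   # token still valid: re-run the previous join command
  else
    echo "method 2"   # token gone: create a new one first
  fi
}
```

On the master this could be used as `kubeadm token list | choose_method <token from the previous join command>`.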

NotReady status

Target: run on k8s-master

Of the three worker nodes, worker-node3 has the status NotReady.

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 3d v1.9.3

worker-node1 Ready <none> 3d v1.9.3

worker-node2 Ready <none> 3d v1.9.3

worker-node3 NotReady <none> 3d v1.9.3

[root@k8s-master ~]#

# Possible cause: the PC went into sleep mode and something happened on the VMs? Why only worker-node3 became NotReady is unclear.
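With only four nodes the affected one is easy to spot by eye, but the same check can be scripted. This is a rough sketch that reads `kubectl get nodes` output on stdin; `not_ready_nodes` is a hypothetical helper name.

```shell
# Print the names of nodes whose STATUS column does not start with "Ready".
# Reads `kubectl get nodes` output on stdin and skips the header line.
not_ready_nodes() {
  awk 'NR > 1 && $2 !~ /^Ready/ { print $1 }'
}
```

Usage on the master would be `kubectl get nodes | not_ready_nodes`.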

[root@k8s-master ~]# kubectl describe nodes

Name: k8s-master

Roles: master

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/hostname=k8s-master

node-role.kubernetes.io/master=

Annotations: flannel.alpha.coreos.com/backend-data={"VtepMAC":"4e:4a:d5:87:49:2d"}

flannel.alpha.coreos.com/backend-type=vxlan

flannel.alpha.coreos.com/kube-subnet-manager=true

flannel.alpha.coreos.com/public-ip=192.168.20.100

node.alpha.kubernetes.io/ttl=0

volumes.kubernetes.io/controller-managed-attach-detach=true

Taints: <none>

CreationTimestamp: Wed, 14 Mar 2018 21:04:34 +0900

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

OutOfDisk False Sun, 18 Mar 2018 00:31:23 +0900 Wed, 14 Mar 2018 21:04:24 +0900 KubeletHasSufficientDisk kubelet has sufficient disk space available

MemoryPressure False Sun, 18 Mar 2018 00:31:23 +0900 Wed, 14 Mar 2018 21:04:24 +0900 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Sun, 18 Mar 2018 00:31:23 +0900 Wed, 14 Mar 2018 21:04:24 +0900 KubeletHasNoDiskPressure kubelet has no disk pressure

Ready True Sun, 18 Mar 2018 00:31:23 +0900 Wed, 14 Mar 2018 21:06:44 +0900 KubeletReady kubelet is posting ready status

Addresses:

InternalIP: 192.168.20.100

Hostname: k8s-master

Capacity:

cpu: 1

memory: 3864196Ki

pods: 110

Allocatable:

cpu: 1

memory: 3761796Ki

pods: 110

System Info:

Machine ID: 52dce277a77a481086f4d49e217dea33

System UUID: 564D23E9-85F6-067B-207D-AEF289B2C248

Boot ID: 45ab193b-7765-4de9-ac7b-851db6c77736

Kernel Version: 3.10.0-693.21.1.el7.x86_64

OS Image: CentOS Linux 7 (Core)

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://17.3.2

Kubelet Version: v1.9.3

Kube-Proxy Version: v1.9.3

PodCIDR: 10.0.0.0/24

ExternalID: k8s-master

Non-terminated Pods: (7 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits

--------- ---- ------------ ---------- --------------- -------------

kube-system etcd-k8s-master 0 (0%) 0 (0%) 0 (0%) 0 (0%)

kube-system kube-apiserver-k8s-master 250m (25%) 0 (0%) 0 (0%) 0 (0%)

kube-system kube-controller-manager-k8s-master 200m (20%) 0 (0%) 0 (0%) 0 (0%)

kube-system kube-dns-6f4fd4bdf-pxl6z 260m (26%) 0 (0%) 110Mi (2%) 170Mi (4%)

kube-system kube-flannel-ds-2ckxj 0 (0%) 0 (0%) 0 (0%) 0 (0%)

kube-system kube-proxy-kntgs 0 (0%) 0 (0%) 0 (0%) 0 (0%)

kube-system kube-scheduler-k8s-master 100m (10%) 0 (0%) 0 (0%) 0 (0%)

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

CPU Requests CPU Limits Memory Requests Memory Limits

------------ ---------- --------------- -------------

810m (81%) 0 (0%) 110Mi (2%) 170Mi (4%)

Events: <none>

~ ~ ~ omitted ~ ~ ~

Name: worker-node3

Roles: <none>

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/hostname=worker-node3

Annotations: flannel.alpha.coreos.com/backend-data={"VtepMAC":"7a:14:3b:25:e8:52"}

flannel.alpha.coreos.com/backend-type=vxlan

flannel.alpha.coreos.com/kube-subnet-manager=true

flannel.alpha.coreos.com/public-ip=192.168.20.130

node.alpha.kubernetes.io/ttl=0

volumes.kubernetes.io/controller-managed-attach-detach=true

Taints: <none>

CreationTimestamp: Wed, 14 Mar 2018 21:08:48 +0900

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

OutOfDisk False Fri, 16 Mar 2018 19:54:54 +0900 Wed, 14 Mar 2018 21:08:48 +0900 KubeletHasSufficientDisk kubelet has sufficient disk space available

MemoryPressure Unknown Fri, 16 Mar 2018 19:54:54 +0900 Sat, 17 Mar 2018 21:10:10 +0900 NodeStatusUnknown Kubelet stopped posting node status.

DiskPressure Unknown Fri, 16 Mar 2018 19:54:54 +0900 Sat, 17 Mar 2018 21:10:10 +0900 NodeStatusUnknown Kubelet stopped posting node status.

Ready Unknown Fri, 16 Mar 2018 19:54:54 +0900 Sat, 17 Mar 2018 21:10:10 +0900 NodeStatusUnknown Kubelet stopped posting node status.

Addresses:

InternalIP: 192.168.20.130

Hostname: worker-node3

Capacity:

cpu: 1

memory: 3864196Ki

pods: 110

Allocatable:

cpu: 1

memory: 3761796Ki

pods: 110

System Info:

Machine ID: 52dce277a77a481086f4d49e217dea33

System UUID: 564D65D7-E7F9-6E68-87A2-6D8126EBECB3

Boot ID: 4b4e0789-0d46-406d-9d72-be96e2d97fb6

Kernel Version: 3.10.0-693.21.1.el7.x86_64

OS Image: CentOS Linux 7 (Core)

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://17.3.2

Kubelet Version: v1.9.3

Kube-Proxy Version: v1.9.3

PodCIDR: 10.0.1.0/24

ExternalID: worker-node3

Non-terminated Pods: (3 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits

--------- ---- ------------ ---------- --------------- -------------

kube-system kube-flannel-ds-xgh7m 0 (0%) 0 (0%) 0 (0%) 0 (0%)

kube-system kube-proxy-b8d9q 0 (0%) 0 (0%) 0 (0%) 0 (0%)

kube-system kubernetes-dashboard-747dc9d765-tskv7 0 (0%) 0 (0%) 0 (0%) 0 (0%)

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

CPU Requests CPU Limits Memory Requests Memory Limits

------------ ---------- --------------- -------------

0 (0%) 0 (0%) 0 (0%) 0 (0%)

Events: <none>

[root@k8s-master ~]#
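The line that matters in the long describe output is the Ready condition, which is Unknown with the message "Kubelet stopped posting node status." for worker-node3. A small sketch for pulling out just that value, assuming the describe output of a single node is piped in; `ready_condition` is a hypothetical helper name.

```shell
# Print the Status column of the "Ready" condition from
# `kubectl describe node <name>` output read on stdin.
ready_condition() {
  awk '$1 == "Ready" { print $2 }'
}
```

For example, `kubectl describe node worker-node3 | ready_condition`. Since the message points at the kubelet, looking at `systemctl status kubelet` and `journalctl -u kubelet` on the worker itself would probably be a reasonable next step, although I did not do that here.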

Execution

Method 1: Add the node again

Target: run on worker-node3 (the token check is run on k8s-master)

Check the token

[root@k8s-master ~]# kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

e36f31.d0b818c324d4a61c 23h 2018-03-19T01:22:31+09:00 authentication,signing <none> system:bootstrappers:kubeadm:default-node-token

Add the node

Command: kubeadm join --token e36f31.d0b818c324d4a61c 192.168.20.100:6443 --discovery-token-ca-cert-hash sha256:a76319319・・・・・

The value passed to --token must match the token shown by the token check.

[root@worker-node3 ~]# kubeadm join --token e36f31.d0b818c324d4a61c 192.168.20.100:6443 --discovery-token-ca-cert-hash sha256:a763193190162b0fba12a1c2b0f5da6fc31163f037e499297347751738cef2b6

[preflight] Running pre-flight checks.

[WARNING FileExisting-crictl]: crictl not found in system path

[discovery] Trying to connect to API Server "192.168.20.100:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://192.168.20.100:6443"

[discovery] Requesting info from "https://192.168.20.100:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "192.168.20.100:6443"

[discovery] Successfully established connection with API Server "192.168.20.100:6443"

This node has joined the cluster:

* Certificate signing request was sent to master and a response

was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

[root@worker-node3 ~]#
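The join command also needs the `--discovery-token-ca-cert-hash` value. If the original command is no longer at hand, the hash can be recomputed from the cluster CA certificate on the master. The sketch below wraps the openssl pipeline described in the kubeadm documentation; the function name `ca_cert_hash` is hypothetical, the default path assumes a standard kubeadm layout, and the pipeline assumes an RSA CA key.

```shell
# Print the value for --discovery-token-ca-cert-hash.
# $1: path to the CA certificate (assumed default: kubeadm's usual location).
ca_cert_hash() {
  ca_cert="${1:-/etc/kubernetes/pki/ca.crt}"
  # SHA-256 over the DER-encoded public key of the CA certificate.
  hash=$(openssl x509 -pubkey -noout -in "$ca_cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')
  printf 'sha256:%s\n' "$hash"
}
```

Run as `ca_cert_hash` on the master, the output should be usable as-is after `--discovery-token-ca-cert-hash`.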

Check the node status

worker-node3 is back to Ready.

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 3d v1.9.3

worker-node1 Ready <none> 3d v1.9.3

worker-node2 Ready <none> 3d v1.9.3

worker-node3 Ready <none> 12m v1.9.3

[root@k8s-master ~]#
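Instead of re-running `kubectl get nodes` by hand until the node comes back, the check can be polled. A rough sketch, where `node_status` and `wait_for_ready` are hypothetical helpers and the 10-second interval and 30 attempts are arbitrary choices:

```shell
# Print the STATUS column for one node from `kubectl get nodes` output on stdin.
node_status() {
  awk -v n="$1" 'NR > 1 && $1 == n { print $2 }'
}

# Poll until the node reports Ready; give up after 30 attempts (about 5 minutes).
wait_for_ready() {
  node="$1"
  tries=0
  while [ "$tries" -lt 30 ]; do
    [ "$(kubectl get nodes | node_status "$node")" = "Ready" ] && return 0
    tries=$((tries + 1))
    sleep 10
  done
  return 1
}
```

On the master, `wait_for_ready worker-node3 && echo "worker-node3 is Ready"` would then block until the status changes or the attempts run out.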

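Method 2: Create a token, then add the node

Target: run on worker-node3 (the token commands are run on k8s-master)

Check the token

# Nothing is listed when there is no valid token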

[root@k8s-master ~]# kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

[root@k8s-master ~]#

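Check the node join command (the one used at the previous registration)

The token in the join command used last time no longer appears in the token list.

Command: kubeadm join --token ac9407.2cfb4609931d11b3 192.168.20.100:6443 --discovery-token-ca-cert-hash sha256:a76319・・・・・

Previous token: ac9407.2cfb4609931d11b3

Token list: none

Create a new token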

[root@k8s-master ~]# kubeadm token create

e36f31.d0b818c324d4a61c

[root@k8s-master ~]#
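With the new token in hand, the previous join command only needs its `--token` value swapped. A minimal sketch of that substitution, assuming the usual kubeadm token format of 6 characters, a dot, and 16 characters; `swap_join_token` is a hypothetical helper name.

```shell
# Replace the --token value in an existing `kubeadm join` command line.
# $1: the previous join command, $2: the newly created token.
swap_join_token() {
  printf '%s\n' "$1" | sed -E "s/(--token )[a-z0-9]{6}\.[a-z0-9]{16}/\1$2/"
}
```

If your kubeadm version supports it, `kubeadm token create --print-join-command` should print a complete join command in one step, which avoids the manual edit; I have not confirmed that on this cluster.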

Add the node

Command: kubeadm join --token e36f31.d0b818c324d4a61c 192.168.20.100:6443 --discovery-token-ca-cert-hash sha256:a76319319・・・・・

Replace the value passed to --token with the newly created token.

[root@worker-node3 ~]# kubeadm join --token e36f31.d0b818c324d4a61c 192.168.20.100:6443 --discovery-token-ca-cert-hash sha256:a763193190162b0fba12a1c2b0f5da6fc31163f037e499297347751738cef2b6

[preflight] Running pre-flight checks.

[WARNING FileExisting-crictl]: crictl not found in system path

[discovery] Trying to connect to API Server "192.168.20.100:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://192.168.20.100:6443"

[discovery] Requesting info from "https://192.168.20.100:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "192.168.20.100:6443"

[discovery] Successfully established connection with API Server "192.168.20.100:6443"

This node has joined the cluster:

* Certificate signing request was sent to master and a response

was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

[root@worker-node3 ~]#

Check the node status

worker-node3 is back to Ready.

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 3d v1.9.3

worker-node1 Ready <none> 3d v1.9.3

worker-node2 Ready <none> 3d v1.9.3

worker-node3 Ready <none> 6m v1.9.3

[root@k8s-master ~]#
